Coronavirus is forcing tech giants to make a risky bet on AI

Today’s discussion centers on the workers at Facebook and Google: the content moderators who keep working every single day during the pandemic. It’s a story about difficult tradeoffs, and about actions taken over the past few days that will have a significant effect on these businesses in the future.

Historically, content moderation on social networks was little more than a business problem: let in the nudity and the Nazis, and the community collapses. Later on, it became a legal and regulatory problem as well. Companies had a legal obligation to remove terrorist propaganda, child abuse imagery, and other forms of content. As services like Google and Facebook grew their user bases into the billions, content moderation became a problem of scale. The obvious question arose: how can anyone review the millions of posts a day that get reported for violations?

The only solution, I think, was to outsource the job to consultancy companies. In 2016, when a shortage of content moderators was revealed, tech companies hired tens of thousands of moderators around the world. This created a privacy issue: when moderators work in house, the company can apply restrictions and control the devices they use and the data they can access. When they work for a third party, there is a risk of data leakage.

This fear of data leaks ran strong at the time. For Facebook in particular, the post-2016 election backlash had arisen partly over privacy concerns: if the world learned that data was being gleaned from users’ accounts, trust in the company would plunge precipitously. This is why the outsourced content moderation sites for Facebook and YouTube were designed as secure rooms. Employees work on production floors that they must badge in and out of. They are barred from bringing in personal devices, lest they take surreptitious photos or attempt to smuggle out data. Anyone who brings a phone onto the production floor is fired for it. Many workers complain about these restrictions, but the companies have been unwilling to relax them for fear of a high-profile data loss.

Fast forward to today, when the pandemic is spreading around the world at a frightening speed. We still need the moderators working, if not more of them, since usage is clearly surging. If you keep them working on the production floor, you will most probably contribute to the spread of the disease. But if you let them work from home, you invite a privacy disaster. This creates a dilemma.

What do you do if you are Facebook? Until Monday, the answer looked a lot like business as usual. Sam Biddle broke this story in The Intercept last week:

“Discussions from Facebook’s internal employee forum reviewed by The Intercept reveal a state of confusion, fear, and resentment, with many precariously employed hourly contract workers stating that, contrary to statements to them from Facebook, they are barred by their actual employers from working from home, despite the technical feasibility and clear public health benefits of doing so.

The discussions focus on Facebook contractors employed by Accenture and WiPro at facilities in Austin, Texas, and Mountain View, California, including at least two Facebook offices. (In Mountain View, a local state of emergency has already been declared over the coronavirus.) The Intercept has seen posts from at least six contractors complaining about not being able to work from home and communicated with two more contractors directly about the matter. One Accenture employee told The Intercept that their entire team of over 20 contractors had been told that they were not permitted to work from home to avoid infection.”

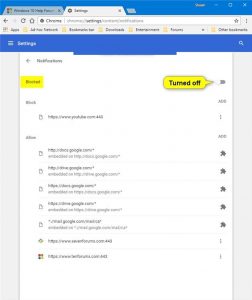

As Biddle notes, Facebook was already encouraging employees to work from home. It then began informing contract moderators that they should not come into the office, and said it will keep paying them during the disruption. Here is the announcement:

“For both our full-time employees and contract workforce there is some work that cannot be done from home due to safety, privacy and legal reasons. We have taken precautions to protect our workers by cutting down the number of people in any given office, implementing recommended work from home globally, physically spreading people out at any given office and doing additional cleaning. Given the rapidly evolving public health concerns, we are taking additional steps to protect our teams and will be working with our partners over the course of this week to send all contract workers who perform content review home, until further notice. We’ll ensure that all workers are paid during this time.”

A similar announcement followed from Google on Sunday, and then a joint announcement from Facebook, Google, LinkedIn, Microsoft, Reddit, Twitter, and YouTube. So now the moderators have been sent home. How does the stuff get moderated? Facebook allowed some moderators who work on less sensitive content to work from home. More sensitive work is being shifted to full-time employees. But the companies are now also leaning more heavily on machine learning systems in an effort to automate content moderation.

In the long term, every social network aims to put artificial intelligence in charge. Google, however, had said that the day when such a thing would be possible was still quite far away. Yet on Monday, the company changed its tune. Here is Jake Kastrenakes at The Verge:

“YouTube will rely more on AI to moderate videos during the coronavirus pandemic, since many of its human reviewers are being sent home to limit the spread of the virus. This means videos may be taken down from the site purely because they’re flagged by AI as potentially violating a policy, whereas the videos might normally get routed to a human reviewer to confirm that they should be taken down. […]

Because of the heavier reliance on AI, YouTube basically says we have to expect that some mistakes are going to be made. More videos may end up getting removed, “including some videos that may not violate policies,” the company writes in a blog post. Other content won’t be promoted or show up in search and recommendations until it’s reviewed by humans.

YouTube says it largely won’t issue strikes — which can lead to a ban — for content that gets taken down by AI (with the exception of videos it has a “high confidence” are against its policies). As always, creators can still appeal a video that was taken down, but YouTube warns this process will also be delayed because of the reduction in human moderation.”

On Monday evening, both Facebook and Twitter followed suit. Here is Paresh Dave in Reuters:

“Facebook also said the decision to rely more on automated tools, which learn to identify offensive material by analyzing digital clues for aspects common to previous takedowns, has limitations.

“We may see some longer response times and make more mistakes as a result,” it said.

Twitter said it too would step up use of similar automation, but would not ban users based solely on automated enforcement, because of accuracy concerns.”

The day-one results were not great. Here is Josh Constine in TechCrunch:

“Facebook appears to have a bug in its News Feed spam filter, causing URLs to legitimate websites including Medium, BuzzFeed, and USA Today to be blocked from being shared as posts or comments. The issue is blocking shares of some coronavirus-related content, while some unrelated links are allowed through, though it’s not clear what exactly is or isn’t tripping the filter. Facebook has been trying to fight back against misinformation related to the outbreak, but may have gotten overzealous or experienced a technical error.”

Facebook says the bug is unrelated to the changes in content moderation. Meanwhile, one of the moderators told me, “We were working with people who were exposed, definitely.” I think the companies moved too late, and the actions they initially took were clearly insufficient.